Introduction

You can call it the new SOA, you can call it microservices, or something else. Architectural best practices tend to change, or to reappear under different names, every few years. However, one thing stays unchanged - logic. Terms like "flexible" and "adjustable" are vague and broad, so in this article I want to cover the key aspects of architectures that are modern, safe and not legacy. You can also read this article as a guide for auditing a solution architecture and for planning a design upgrade according to the technical priorities of the business system.

If you're a good Architect, you can figure out the most reasonable architecture for a specific system at a specific point in time, based on known priorities. The major problem is that there is no general perfect architecture. Industry, science and business are moving forward at rocket speed, and so is technology. In an era of data all over the place, technologies and frameworks keep maturing and offering new approaches. The moment will come when you have to change an architecture that used to be perfect, and that's not a bad thing - we just have to be armed and ready for it.

Not really about code

Note:

The term Architect is pretty broad too. In this context, I assume that there is a Software Architect who is responsible for the code of a software module. Some companies don't have such a position; they just have Technical Leads for specific functionality. The Architect I am talking about is responsible for a much wider area than a Software Architect, and looks less at the code and more at the integration parts of the overall system. They take into account the dirtiest parts that are almost always left without attention. Their goal is to make sure that all the parts of the system are developed, tested and delivered with proper quality and on time. Such people are very valuable and usually work across multiple projects within a company.

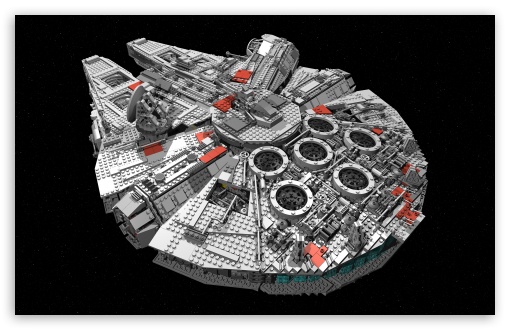

Imagine two different architects building a spaceship. The first one decided to use paper and glued together a nice ship.

He also decided to put his spaceship inside a nice transparent protection screen that fit the spaceship perfectly.

The second architect decided to build a spaceship from Lego parts. Lego parts are composable and firm enough so they don't need a special protection screen.

Both ships worked fine and served their purpose. However, some time later new power blocks were introduced, and the architects were challenged to introduce the change into their systems.

The paper architect started stressing out, because he had to land the ship at the station, remove the old screen, glue on the new power blocks and apply a new, bigger protection screen. In effect, he had to rebuild the spaceship and deliver a new version of it. He had all the construction templates, so rebuilding the ship wasn't new to him, but he and his whole team spent a lot of time on the operation.

The Lego architect didn't have to land the spaceship. He figured out which part of it was affected by the change and gathered the power team to replace the power system on the fly. They developed the new blocks and added them to the spaceship as soon as they were ready, without distracting the rest of the crew.

Later, the Lego architect spent some time in the research laboratory thinking about how to optimize the build and deployment process, because the team was spending a lot of time on it. He figured out that, for development purposes, he and his team could use other materials and a smaller construction to experiment with. So they requested a 3D printer, uploaded all the construction templates, created a build pipeline and automated some routine tasks.

Of course, this is a toy example. But what can we learn from it? Even though both architects completed the task and both ships functioned successfully for some time, they had to face changes and adapt their systems. The complexity appeared in the integration phase, and it was related not to fulfilling the original goal, but to operational flexibility, adjustability and modularity.

Of course, software architecture is extremely important. But good code alone is not enough to deliver and maintain a decent solution, because it's not only about code architecture. It's about what happens between the software components that we write: where they are stored, developed, tested and deployed, how they discover each other, how they interact with each other, what the approach to integration is, how easy it is to introduce changes, and so on.

These activities are not just writing code, but they are a very important part of building the solution: they take time and influence the result a lot.

Characteristics to take into account

Follow principles such as modularity, loose coupling and share-nothing architecture. Reduce the number of dependencies between components, and watch the dependency tree of your services so that you are aware of potential chained failures and other consequences.
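One way to watch the dependency tree is to ask, for every service, "who is affected if this one fails?". Below is a minimal sketch of that question in Python. The service names and the `DEPENDS_ON` map are hypothetical; in a real system the graph would come from your service registry or deployment descriptors.

```python
from collections import defaultdict, deque

# Hypothetical service graph: each service maps to the services it calls.
DEPENDS_ON = {
    "web": ["orders", "catalog"],
    "orders": ["payments", "inventory"],
    "catalog": ["inventory"],
    "payments": [],
    "inventory": [],
}


def affected_by(failed, depends_on):
    """Return every service that directly or transitively depends on `failed`."""
    # Invert the edges: for each service, collect the services that call it.
    dependents = defaultdict(set)
    for service, deps in depends_on.items():
        for dep in deps:
            dependents[dep].add(service)

    # Breadth-first walk up the inverted graph, starting at the failed service.
    seen = set()
    queue = deque([failed])
    while queue:
        current = queue.popleft()
        for caller in dependents[current]:
            if caller not in seen:
                seen.add(caller)
                queue.append(caller)
    return seen


if __name__ == "__main__":
    for name in DEPENDS_ON:
        print(name, "->", sorted(affected_by(name, DEPENDS_ON)))
```

A service with a large result set here is a good candidate for extra protection, because its failure can chain through many callers.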

Determine responsibilities of separate components based on the specific domain context or business capabilities. DDD is a good architectural foundation.

Compose your services in an isolated fashion, so that you can operate and change the functionality within a service without affecting other services. If you need to synchronize updates of many services, it means (in most cases) that they are not loosely coupled enough. Of course, there are exceptions to any smart rule. For example, if you deploy your system on an IoT device, you may decide to deploy all components at once, and that's absolutely fine. The point is to keep your services loosely coupled enough to allow flexible deployment on the various platforms you have to support. However, don't think that you can fully escape coupling; it will always be there to some extent. Sometimes adding abstraction layers for the sake of loose coupling makes the solution more complicated rather than more flexible. The important thing is to find the pieces of software whose functionality rarely intersects with the rest of the system and that change together. Try to pick out those parts and see whether they are worth being a separate, self-sufficient service. If yes, it may be the right decision to put them close together in one microservice, following the high cohesion principle.

Keep in mind that it should be easy to introduce new components of the system and replace existing ones.

Aim for a stateless architecture, which is the foundation for scaling the system out easily.

Pay close attention to how the communication between services and components is done. Research the interaction protocols to determine which of them suit you best, e.g. in terms of speed or ease of use.

Infrastructure, configuration, testing, deployment, operations

Define a strategy for configuration management. Propagating configuration changes everywhere at the same moment matters in general, and it is essential in systems that need atomic updates.

Today, it is suicidal to run a big, data-intensive solution without automated infrastructure and well-established processes for building, testing and deploying it.

Set aside enough time to plan the environments and infrastructure for development, testing, production and whatever else you need.

The testing process and strategy are extremely important. Some proven practices are Blue-Green deployments, Canary releases/Dark canaries and A/B testing. Build your test environment to be as much like the production environment as you can; at the very least, the topology should always be the same. Always do stress and load testing, and try to test on the production environment as early as possible, because it is the most precise way of finding real-world failures.
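To make the Canary release idea more concrete, here is a minimal sketch of how a router might decide which release serves a given user. The function name `pick_release` and the hash-based bucketing are my assumptions for illustration, not the mechanism of any particular tool.

```python
import hashlib


def pick_release(user_id: str, canary_percent: int) -> str:
    """Route a stable slice of users to the canary release.

    The same user always lands in the same bucket, so raising
    `canary_percent` only adds users to the canary, it never
    shuffles the ones already there.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # value in 0..99
    return "canary" if bucket < canary_percent else "stable"


if __name__ == "__main__":
    for user in ("alice", "bob", "carol"):
        print(user, pick_release(user, canary_percent=10))
```

Hashing the user ID instead of picking at random keeps the experience consistent for each user while the canary share grows from, say, 1% to 100%.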

Adjustable architecture also means flexible and independent deployments and ease of infrastructural operations of your services.

Take advantage of the immutable infrastructure approach. It makes sure that the build/continuous integration process is based on a pipeline that is stored somewhere. It is good practice to make changes on running servers impossible (e.g. by disabling ssh): every change should go through the defined configuration and build pipeline, which then applies it to all the systems that require it. This prevents configuration drift, when, for example, one person makes a manual change in one environment (e.g. changes the database schema) and leaves the other environments inconsistent with it.

Development teams should not depend critically on the specific infrastructure they run on. One day you might have to step away from the current infrastructure, so don't tie yourself to it.
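Configuration drift is easy to detect once the desired state lives in one place. The sketch below compares a fingerprint of the desired configuration with the one reported by each environment. The configuration keys and environment names are hypothetical, and how you collect the actual configuration from an environment is left out.

```python
import hashlib
import json


def fingerprint(config: dict) -> str:
    """Hash a configuration in a canonical form, independent of key order."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def find_drift(desired: dict, environments: dict) -> list:
    """Return the names of environments whose config differs from `desired`."""
    expected = fingerprint(desired)
    return sorted(
        name
        for name, actual in environments.items()
        if fingerprint(actual) != expected
    )


if __name__ == "__main__":
    # Hypothetical desired state, as stored with the pipeline.
    desired = {"schema_version": 7, "pool_size": 20}
    # Hypothetical state reported by each environment.
    environments = {
        "staging": {"pool_size": 20, "schema_version": 7},
        "production": {"schema_version": 8, "pool_size": 20},  # manual change
    }
    print(find_drift(desired, environments))
```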

In-between the code

What is in-between the code? Service discovery, request routing, networking layers, proxying and load balancing, just to name a few.

A lot of production errors happen because integration points fail, or because of other things that originate not in the business logic of the system itself, but in incorrect or poor integration of the pieces and the interactions between them.

With an increased rate of change, keep an eye on all the moving pieces of the system. Always keep availability in mind, think about scaling, respond to issues in a predictable and defined way, and build a plan to mitigate risks.

Failures everywhere

Be paranoid. Design the architecture for failure - enumerate everything that might go wrong, because it will. Brainstorm and question all the things that can fail, and protect those places.

What if the connection cannot be established or fails?

What if it takes longer than expected?

What if the request fails with no clear response or returns an incorrect answer?

What if no acknowledgement comes back?

What if a billion requests arrive concurrently?

What if the server crashes? A rack? A data center?

What if the database gets corrupted?

What if deployment fails during the install?

What if a new piece of the production system fails after a new deployment?

Integration errors can be amazingly diverse. So, what can we do to avoid them?

Use techniques such as circuit breakers, timeouts, handshaking and bulkheads - they will help you protect the integration points of the system.

Bulkheads partition capacity to preserve partial functionality when something bad happens, which prevents chain reactions from ruining the entire service.
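A bulkhead can be as simple as a fixed number of slots per downstream dependency. The sketch below is one possible shape of it, assuming a thread-based service; the class and exception names are hypothetical. When all slots for a dependency are taken, new calls are rejected immediately instead of piling up and exhausting the threads that serve everything else.

```python
import threading


class BulkheadFullError(Exception):
    """Raised when the dependency has no free slot left."""


class Bulkhead:
    """Limits the number of concurrent calls to one dependency."""

    def __init__(self, max_concurrent: int):
        self._slots = threading.BoundedSemaphore(max_concurrent)

    def call(self, func, *args, **kwargs):
        # Do not wait for a slot: fail fast so the caller can degrade gracefully.
        if not self._slots.acquire(blocking=False):
            raise BulkheadFullError("no free slot for this dependency")
        try:
            return func(*args, **kwargs)
        finally:
            self._slots.release()


if __name__ == "__main__":
    # One bulkhead per dependency keeps a slow one from starving the others.
    recommendations = Bulkhead(max_concurrent=5)
    print(recommendations.call(lambda: ["book", "lamp"]))
```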

A Circuit Breaker protects your system by preventing calls to a failed integration point. It is helpful for the clients of the parts of the system that are down. If a Circuit Breaker becomes active, it means there is a big problem that you should log, investigate and analyze.
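Here is a minimal, single-threaded sketch of the Circuit Breaker idea, not a replacement for a library such as Hystrix. The thresholds, the class names and the injectable `clock` parameter are my assumptions; a production version would also need locking and logging when the circuit opens.

```python
import time


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_timeout:
                # Open: reject without touching the failed integration point.
                raise CircuitOpenError("integration point is marked as failed")
            # Timeout elapsed: let one trial call through (half-open state).
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            trial_failed = self._opened_at is not None
            if trial_failed or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()  # open or re-open the circuit
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result


if __name__ == "__main__":
    breaker = CircuitBreaker(failure_threshold=2, reset_timeout=5.0)

    def flaky():
        raise ConnectionError("remote service is down")

    for attempt in range(3):
        try:
            breaker.call(flaky)
        except CircuitOpenError as exc:
            print("rejected:", exc)
        except ConnectionError as exc:
            print("failed:", exc)
```

The clients get a fast, explicit rejection while the circuit is open, and the failed service gets time to recover instead of being hammered with retries.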

Be careful with the code you cannot directly see, watch your dependencies and any shared resources.

In addition to having the correct testing and deployment processes, aim to use realistic data volumes and quantitative ratios between the topological elements of the system when testing.

To prevent common issues like losing availability, track the responsiveness of your system: detect programmatically when the average response time keeps getting slower, and take action.

Logging, monitoring and automation of system operations are must-have practices. With microservices, monitoring becomes easier because services are isolated, which helps to locate a fault. Some great approaches are to use correlation IDs and a common log format when collecting and analyzing logs. Keep in mind that logs can take a lot of space, so consider log aging, e.g. configure log file rotation based on size. There are some nice tools for visualization and dashboards that provide a structured view of the important processes.
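Noticing a slowdown programmatically can start very small. The sketch below compares the average of the most recent response times with the average of the window just before it. The class name, the window size and the slowdown factor are assumptions to tune for your system; real monitoring tools usually look at percentiles as well.

```python
from collections import deque
from statistics import mean


class ResponseTimeMonitor:
    """Flags a slowdown when recent calls are much slower than earlier ones."""

    def __init__(self, window=50, slowdown_factor=1.5):
        self._older = deque(maxlen=window)   # samples pushed out of `_recent`
        self._recent = deque(maxlen=window)  # the latest `window` samples
        self._factor = slowdown_factor

    def record(self, seconds: float) -> None:
        if len(self._recent) == self._recent.maxlen:
            # The oldest recent sample is about to be evicted: keep it as history.
            self._older.append(self._recent[0])
        self._recent.append(seconds)

    def is_degrading(self) -> bool:
        if len(self._older) < self._older.maxlen:
            return False  # not enough history to compare against
        return mean(self._recent) > self._factor * mean(self._older)


if __name__ == "__main__":
    monitor = ResponseTimeMonitor(window=3, slowdown_factor=1.5)
    for sample in (0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.5, 0.5, 0.5):
        monitor.record(sample)
    print("degrading:", monitor.is_degrading())
```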
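As an illustration of correlation IDs, a common log format and size-based rotation together, here is a small sketch built on Python's standard `logging` module. The service name, the log format and the size limits are assumptions; the `start_request` helper is hypothetical and stands for whatever hook your framework gives you at the beginning of a request.

```python
import contextvars
import logging
import logging.handlers
import uuid

# Holds the ID of the request currently being handled ("-" outside a request).
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class CorrelationIdFilter(logging.Filter):
    """Copies the current correlation ID onto every log record."""

    def filter(self, record):
        record.correlation_id = correlation_id.get()
        return True


def build_logger(service_name, path, max_bytes=10_000_000, backups=5):
    """Create a logger with one shared format and size-based file rotation."""
    handler = logging.handlers.RotatingFileHandler(
        path, maxBytes=max_bytes, backupCount=backups
    )
    handler.setFormatter(logging.Formatter(
        "%(asctime)s %(levelname)s %(name)s [%(correlation_id)s] %(message)s"
    ))
    handler.addFilter(CorrelationIdFilter())
    logger = logging.getLogger(service_name)
    logger.setLevel(logging.INFO)
    logger.addHandler(handler)
    return logger


def start_request(incoming_id=None):
    """Reuse the caller's correlation ID, or create one at the system edge."""
    value = incoming_id or str(uuid.uuid4())
    correlation_id.set(value)
    return value


if __name__ == "__main__":
    log = build_logger("orders", "orders.log")
    start_request()  # an edge service would generate the ID here
    log.info("order accepted")
```

If every service passes the ID along with its outgoing calls and logs it in the same position, a single search over the collected logs reconstructs the path of one request through the system.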

It's important to maintain the correct versioning of services to make sure that changes to one service do not affect existing clients. It's also pretty common to run several versions of a service side-by-side as separate deployments. Be ready to support backward compatibility for a long time.
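Running versions side by side usually means that something in front of the service decides which deployment receives a request. A minimal sketch of that decision is below. The `X-API-Version` header, the upstream addresses and the default version are all hypothetical choices for the example.

```python
# Hypothetical addresses of two deployments of the same service.
UPSTREAMS = {
    "1": "http://orders-v1.internal:8080",
    "2": "http://orders-v2.internal:8080",
}

# Existing clients that send no version keep the behavior they were built for.
DEFAULT_VERSION = "1"


def resolve_upstream(headers: dict) -> str:
    """Pick the deployment that should serve a request, based on its version header."""
    version = headers.get("X-API-Version", DEFAULT_VERSION)
    try:
        return UPSTREAMS[version]
    except KeyError:
        raise LookupError(f"unsupported API version: {version!r}") from None


if __name__ == "__main__":
    print(resolve_upstream({}))
    print(resolve_upstream({"X-API-Version": "2"}))
```

Removing version "1" from the map is then an explicit, reviewable step rather than a side effect of deploying version "2".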

Concerns and things to always keep in mind

In most cases you already have a working solution that has problems with deployment, operations and architectural flexibility. You might want to split your monolith into services, and this should be done carefully: when a solution is split into incorrect components or wrongly bounded services, it's not going to work in the future. Most microservice applications started out as monoliths and were migrated later.

Here are some basic notes to keep in mind while dividing into services.

Know specific business requirements and the domain before the start of partitioning.

Watch out for shared capabilities and data; they should be modeled correctly.

It is better to migrate step by step, doing only the reasonable, logical things that suit your system.

It's important that the domain is well understood before you begin partitioning it, as refactoring boundaries is expensive.

Have a clear and distinct team structure for working on the services of your solution.

People, teams, organizational impact

This topic deserves a separate article, because the flexibility of the architecture and the established processes described here affect how teams are organized. They give teams much more flexibility and more opportunities for continuous innovation, and let each team work efficiently at its own pace. People are not organized into teams by technology stack; usually the most efficient way is to make teams responsible for specific microservices, which will most likely require knowledge of multiple technology stacks. As services may be written in different languages, this gives teams some autonomy and freedom.

All this theory is nothing without knowing how to apply it for real in production

Containerization and clustering tools

Docker

Docker Swarm

Kubernetes

Mesos

Serf

Nomad

Infrastructure automation / deployment

Jenkins

Terraform

Vagrant

Packer

Otto

Chef, Puppet, Ansible

Configuration

Edda

Archaius

Decider

Zookeeper

Service Discovery

Eureka

Prana

Finagle

Zookeeper

Consul

Routing and Load balancing

Denominator

Zuul

Netty

Ribbon

HAProxy

NGINX

Monitoring, tracing, logs

Hystrix

Consul health checks

Zipkin

Pytheus

SALP

Elasticsearch logstash

Communication Protocols

Protocol Buffers

Thrift

JSON/XML/Other text

Etc.

Practical references for some tools to apply

Docker Swarm

Provides native clustering capabilities that turn a group of Docker engines into a single, virtual Docker Engine: a pool of Docker nodes that can be managed as if they were a single machine.

Docker monitoring tools - AppDynamics, Datadog, New Relic, Scout, SignalFx, Sysdig.

Kubernetes

An open-source platform for automating deployment, scaling, and operations of application containers across clusters of hosts, providing container-centric infrastructure.

Apache Mesos

Abstracts CPU, memory, storage, and other compute resources away from machines (physical or virtual), enabling fault-tolerant and elastic distributed systems to easily be built and run effectively.

Mesosphere

The official documentation says: "The DCOS is a new kind of operating system that spans all of the machines in your datacenter or cloud and treats them as a single computer, providing a highly elastic and highly scalable way of deploying applications, services, and big data infrastructure on shared resources. The DCOS consists of everything necessary to build out a self-healing, fault-tolerant, and scalable solution." To have a better understanding of what exactly Mesosphere is, look at this post.

Mesos Aurora

Runs applications and services across a shared pool of machines, and is responsible for keeping them running, forever. When machines experience failure, Aurora intelligently reschedules those jobs onto healthy machines. Supports rolling updates with automatic rollback, which is useful while doing rolling deployments of microservices.

Vagrant

A tool for building complete development environments in a virtual machine.

Packer

A tool for creating machine and container images for multiple platforms from a single source configuration. A good article on Vagrant and Packer that gives a lot of intuition about the use cases and differences.

Terraform

A tool for building, changing, and versioning infrastructure safely and efficiently, multi vendor cloud infrastructure automation.

Consul

Provides an HTTP API that we can use for service announcement and discovery as well as a DNS interface. In addition to service discovery Consul can perform service health checks, which can be used for monitoring or service discovery routing. Consul also has a very nice templating client, which can be used to monitor service announcement changes and generate proxy client configurations. Other features include a key/value store, multi-datacenter support.

Eureka

REST based service that is primarily used in the AWS cloud for locating services for the purpose of load balancing and failover of middle-tier servers.

Ribbon

An inter-process communication (remote procedure call) library with built-in software load balancers. The primary usage model involves REST calls with support for various serialization schemes.

Zuul

Zuul is the front door for all requests from devices and web sites to the backend of the Netflix streaming application. As an edge service application, Zuul is built to enable dynamic routing, monitoring, resiliency and security. It also has the ability to route requests to multiple Amazon Auto Scaling Groups as appropriate.

Finagle

An extensible RPC system for the JVM, used to construct high-concurrency servers. Finagle implements uniform client and server APIs for several protocols, and is designed for high performance and concurrency. Most of Finagle's code is protocol agnostic, simplifying the implementation of new protocols.

Zipkin

A distributed tracing system that helps to gather timing data.

Hystrix

A latency and fault tolerance library designed to isolate points of access to remote systems, services and 3rd party libraries, stop cascading failure and enable resilience in complex distributed systems where failure is inevitable.

Zookeeper

A centralized service for maintaining configuration information, naming, providing distributed synchronization, and providing group services. Mesos uses ZooKeeper for coordination.

Etcd

Distributed, consistent key-value store for shared configuration and service discovery. Supports curl'able user-facing API (HTTP+JSON), optional SSL client cert authentication, benchmarked 1000s of writes/s per instance, distributed using Raft.

Protocol Buffers

Google's language-neutral, platform-neutral, extensible mechanism for serializing structured data. You define how you want your data to be structured once, then you can use special generated source code to easily write and read your structured data to and from a variety of data streams and using a variety of languages.

Thrift

Allows you to define data types and service interfaces in a simple definition file. Taking that file as input, the compiler generates code to be used to easily build RPC clients and servers that communicate seamlessly across programming languages.

Materials on architecture, SOA and microservices

Service-Oriented Architecture: Concepts, Technology, and Design

SOA Patterns

Release It!

Building Microservices

Microservices: Flexible Software Architectures

Architecting for Scale

Microservices with Docker on Microsoft Azure Unleashed

Spring Cloud